VRTogether was present at the 6th edition of VRDays Europe.

Photo credits: VRTogether

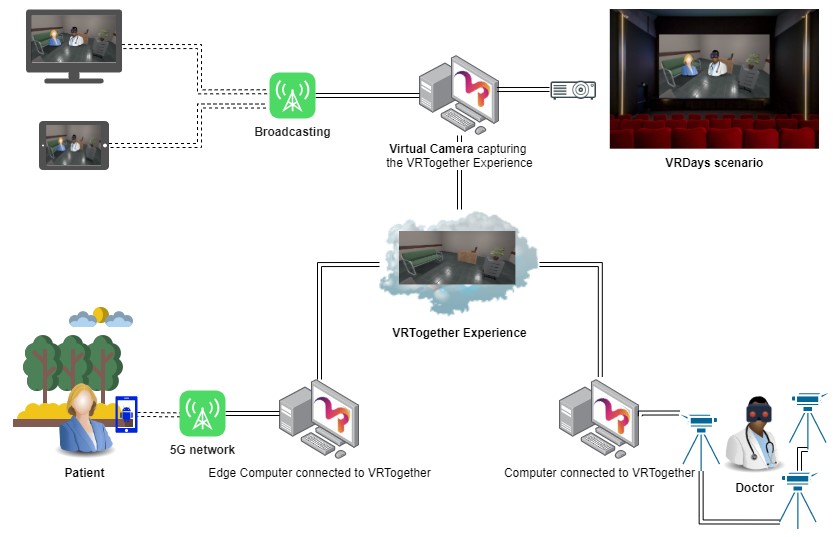

VRTogether was present at VRDays Europe 2020, showcasing the world’s first live 3D video conference using point clouds over a commercial 5G network.

VRTogether partners CWI and Sound gave a live demonstration of the platform's potential for emergency healthcare services. The demo showcased volumetric video conferencing, based on point clouds, between a doctor in a medical examination room and an actor playing an injured patient out on the street.

The doctor was captured on the VRDays stage using Azure Kinect cameras. On the same stage, the experience was captured by a virtual camera, projected on a big screen and broadcast in real time. The patient joined the session from outside, near Science Park in Amsterdam, was captured with a Samsung S20 Ultra 5G smartphone, and was streamed in real time over KPN's standard 5G network.

The capture application on the phone uses the depth and color cameras to capture depth and color frames. After some alignment transformations are applied, a point cloud is computed from the information in both frames. For the demo, we used a depth resolution of 240×180 pixels, which yielded an average of 20,000 points per frame. Streaming at 20 fps resulted in a 51.2 Mbit/s stream: too high for a 4G network (typical upload speed around 25 Mbit/s), but within the capacity of a 5G network (even in the 700 MHz band).
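The step that turns aligned depth and color frames into a point cloud can be sketched with a standard pinhole camera model. This is a minimal illustration, not the VRTogether capture app's code: it assumes the color frame is already aligned to the depth frame (the alignment transformations mentioned above), that a depth of 0 means "no measurement", and that the camera intrinsics `fx`, `fy`, `cx`, `cy` are known. The names `Intrinsics` and `depth_to_point_cloud` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
# (x, y, z, r, g, b): position in camera space plus color
Point = Tuple[float, float, float, int, int, int]


@dataclass(frozen=True)
class Intrinsics:
    """Hypothetical pinhole intrinsics of the (aligned) depth camera, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


def depth_to_point_cloud(
    depth: Sequence[Sequence[float]],
    color: Sequence[Sequence[RGB]],
    k: Intrinsics,
) -> List[Point]:
    """Back-project every pixel with a valid depth into a colored 3D point.

    ASSUMPTION: `color` is already aligned to `depth` (same size, same pixel grid).
    x and y come out in the same unit as z.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v: pixel row
        for u, z in enumerate(row):      # u: pixel column, z: depth value
            if z <= 0:
                continue                 # no depth measurement at this pixel
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy
            r, g, b = color[v][u]
            points.append((x, y, z, r, g, b))
    return points


if __name__ == "__main__":
    k = Intrinsics(fx=1.0, fy=1.0, cx=0.0, cy=0.0)
    depth = [[1.0, 0.0],
             [2.0, 2.0]]
    color = [[(255, 0, 0)] * 2,
             [(0, 255, 0)] * 2]
    for p in depth_to_point_cloud(depth, color, k):
        print(p)  # three points; the zero-depth pixel is skipped
```

Pixels without a valid depth reading produce no point, which may be one reason a 240×180 frame (43,200 pixels) yields about 20,000 points on average rather than one point per pixel.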

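| Parameter | Value |
|---|---|
| Depth resolution | 240×180 pixels |
| Max points per frame | 20,000 |
| Bytes per point | 16 |
| Header size | 24 bytes |
| Body size | 320,000 bytes |
| Total size per frame | 320,024 bytes |
| Frame rate | 20 fps |
| Data rate | 6.40 MB/s |
| Bitrate | 51.20 Mbit/s |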
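The bitrate figures can be reproduced with a few lines of arithmetic. In this sketch the per-point layout (three float32 coordinates plus four bytes of color) is an assumption chosen only because it adds up to the 16 bytes per point listed above; the actual wire format is not described here. The helper names are hypothetical.

```python
import struct

# ASSUMPTION: hypothetical point layout, x/y/z as little-endian float32
# (12 bytes) plus R, G, B and one padding byte (4 bytes) = 16 bytes.
POINT_FORMAT = "<fffBBBB"
BYTES_PER_POINT = struct.calcsize(POINT_FORMAT)  # 16
HEADER_BYTES = 24  # per-frame header size, from the figures above


def frame_bytes(points: int) -> int:
    """Size of one frame: fixed header plus the point payload."""
    return HEADER_BYTES + points * BYTES_PER_POINT


def stream_mbit_per_s(points: int, fps: int) -> float:
    """Uncompressed bitrate in Mbit/s (1 Mbit = 10**6 bits)."""
    return frame_bytes(points) * fps * 8 / 1e6


def fits_uplink(points: int, fps: int, uplink_mbit_per_s: float) -> bool:
    """True if the uncompressed stream fits within the given upload speed."""
    return stream_mbit_per_s(points, fps) <= uplink_mbit_per_s


if __name__ == "__main__":
    rate = stream_mbit_per_s(20_000, 20)
    print(f"{frame_bytes(20_000)} bytes/frame, {rate:.2f} Mbit/s")
    # prints: 320024 bytes/frame, 51.20 Mbit/s
    # ~25 Mbit/s is the typical 4G upload speed quoted in the text
    print("fits a typical 4G uplink:", fits_uplink(20_000, 20, 25.0))
    # prints: fits a typical 4G uplink: False
```

The exact value is 51.20384 Mbit/s (6,400,480 bytes/s), which rounds to the 51.20 Mbit/s and 6.40 MB/s shown in the table.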

Capturing point clouds from a mobile phone

Characteristics of the 5G network around Amsterdam’s Science Park

The VRTogether platform was used to orchestrate the experience. Within the project, we have developed an end-to-end pipeline for delivering volumetric video as point clouds. The platform has three unique characteristics: it provides a real-time experience, so we can explore new forms of communication and collaboration using volumetric video; it allows for optimization mechanisms based on the context of interaction and on human behavior; and it is extensible, so that the technical components (capture, compression, delivery, rendering) can be customized to the needs of the interaction. The platform uses an award-winning point cloud compression algorithm developed by the Distributed and Interactive Systems group at CWI.
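The extensibility described above can be illustrated with a pipeline whose stages are interchangeable callables. This is a hypothetical sketch, not VRTogether's actual API: the class and field names are invented, `zlib` merely stands in for a compressor (it is not CWI's point cloud codec), and the delivery stage is a loopback rather than a network transport.

```python
import zlib
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class VolumetricPipeline:
    """Hypothetical end-to-end pipeline with swappable stages."""
    capture: Callable[[], bytes]          # produce one serialized point cloud frame
    compress: Callable[[bytes], bytes]    # sender-side codec
    deliver: Callable[[bytes], bytes]     # transport (a network in a real system)
    decompress: Callable[[bytes], bytes]  # receiver-side codec
    render: Callable[[bytes], int]        # consume the frame; the toy version returns its size

    def run_once(self) -> int:
        """Push a single frame through every stage in order."""
        payload = self.compress(self.capture())
        received = self.deliver(payload)
        return self.render(self.decompress(received))


if __name__ == "__main__":
    pipe = VolumetricPipeline(
        capture=lambda: bytes(320_024),  # all-zero dummy frame, the size of one demo frame
        compress=zlib.compress,          # stand-in codec, NOT the CWI point cloud codec
        deliver=lambda b: b,             # loopback in place of a real transport
        decompress=zlib.decompress,
        render=len,                      # stand-in renderer: reports the frame size
    )
    print(pipe.run_once())  # 320024

    # Swap only the codec (here: no compression); the other stages are reused as-is.
    raw = replace(pipe, compress=lambda b: b, decompress=lambda b: b)
    print(raw.run_once())  # 320024
```

Because the dataclass is frozen, `dataclasses.replace` returns a new pipeline instead of mutating the existing one, which is one simple way to customize a single component without touching the others.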

Article co-written with Patrick de Lange, founder of Sound, a partner in the project.