A bot corresponds to a succession of statements between a technological system and a user.

Photo credits: pch.vector (Freepik)

Without our even realizing it, bots and voice assistants have conquered our daily lives. Do all these voicebots, callbots and chatbots merely produce a “wow” effect, or are they a genuine technological achievement? Carole Lailler, Doctor of Language Sciences and consultant in artificial intelligence, offers some initial answers.

The bot: what is it and how does it work?

Conversation is not to be trifled with: it is far too important and essential to humans for that. Yet it is no longer unusual to interact with a bot. Dialogue systems and other voice assistants, more or less sophisticated, are everywhere:

in a shopping mall, to find your way: this is the voicebot, which captures your spoken words but may either stay silent in return or, on the contrary, speak its answer (and therefore the itinerary) aloud,

on the phone with your insurer, to file a claim: this is the callbot, which, despite the crackling of the line, works like the voicebot and inevitably answers in its more or less sweet voice,

through a screen on the web: this is the chatbot, the one waving at the bottom right because it absolutely wants to help you.

But have we really entered into a conversation with this type of artificial interaction, or are we just trying to act on the world by getting an answer or a service?

Before even questioning the notions of anthropomorphism and of the situation of enunciation (an identified “I” speaks to a “you”, sharing a few fragments of a common universe of reference), it is worth pausing for a few moments on the “what” and the “how”. After all, how does a bot work?

Definition and explanations

First of all, it is essential to keep in mind that a bot, however rudimentary, corresponds “simply” to a succession of statements exchanged between a technological system and its user. Whether we call it a conversational agent because it is embodied in a metal shell, or an intelligent assistant because it provides a service (and mathematically infers elements of a response from context), a dialogue system above all makes it possible to communicate in natural language, without resorting to coded gibberish or other abstruse jargon. We chat and it answers… without further ado or constraint!

However, this is a simplistic view, and the reality is quite different: a bot is a technological building block that is itself made of several parts… Yes, even without speech recognition at the input or speech synthesis at the output, when the exchange remains purely textual, the bot is a processing chain.

First, a phase of understanding the speaker’s intention is required (which domain, which request, which elements are supplied to identify what is being asked?). Second, the system needs to know where it stands in the dialogue (a “thank you” after the first question is not the one that closes the exchange). Finally, it has to send back an answer in one form or another (turning on the light or asserting, with proof, that New Zealand actually has more sheep than inhabitants), on the “right” channel, updating if necessary the “right” field of the database and embellishing (or not) the answer with the “right” additional information and a touch of politeness.
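To make this chain more concrete, here is a minimal sketch in Python of the three stages (understanding, dialogue tracking, response). Everything in it is an assumption made for illustration: the `DialogueState` class, the keyword-based `understand` function and the canned replies are hypothetical stand-ins, not a description of any real system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DialogueState:
    """Hypothetical record of where we are in the dialogue."""
    turns: int = 0
    last_intent: Optional[str] = None


def understand(utterance: str) -> str:
    """Toy intent detection by keyword lookup (stand-in for a real model)."""
    text = utterance.lower()
    if "light" in text:
        return "turn_on_light"
    if "thank" in text:
        return "thanks"
    return "unknown"


def respond(utterance: str, state: DialogueState) -> str:
    """Run one turn: understand, use the dialogue state, answer, update."""
    intent = understand(utterance)
    if intent == "thanks":
        # A "thank you" only closes the exchange if a request was just served.
        if state.last_intent == "turn_on_light":
            reply = "You're welcome!"
        else:
            reply = "Thank you too. How can I help?"
    elif intent == "turn_on_light":
        reply = "The light is on."
    else:
        reply = "Sorry, I did not understand. Could you rephrase?"
    state.turns += 1
    state.last_intent = intent
    return reply
```

The same “thank you” receives two different answers depending on the state, which is the point made above about knowing where we are in the dialogue.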

In fact, contrary to popular belief, users employ all the polite phrases they learned as children and keep a conversational spontaneity that is as disconcerting as it is colourful, and sometimes flowery!

The depth of interaction

So, whether you opt for a task-oriented bot using a combination of rules based on precise knowledge and a few statistical models, or whether you choose to focus on interaction through information retrieval methods and neural models: how do you manage to converse without necessarily chatting or appearing intrusive?

Because even if we can sense the potential wow effect, a dialogue system can perfectly well fail and give the impression of lacking discernment. In 1966, the first bot, named Eliza, greatly offended the secretary of its creator, Joseph Weizenbaum, by questioning her about a very intimate subject: her mother. Indeed, this system, built to simulate an interview with a Rogerian psychotherapist, had as its fallback the emblematic emotional prompt “tell me about your mother”.
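The fallback mechanism described here can be pictured with a toy rule-based responder. This is only a sketch in the spirit of Eliza: the two patterns and their templates are invented for illustration and are not Weizenbaum's original script. Only the fallback sentence is taken from the text above.

```python
import re

# Hypothetical rules: (pattern, response template).
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]

# Used whenever no rule matches the user's statement.
FALLBACK = "Tell me about your mother."


def eliza_reply(utterance: str) -> str:
    """Return the first matching reformulation, or the fallback prompt."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            # Reuse the user's own words, minus trailing punctuation.
            return template.format(match.group(1).rstrip(".!?"))
    return FALLBACK
```

Because the fallback fires regardless of context, it can land on a sensitive subject at exactly the wrong moment, which is the lack of discernment discussed above.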

When no answer is possible, the dialogue literally comes to an end. Now, a dialogue, need we be reminded, is first and foremost a speaker addressing an interlocutor. It is an “I” who speaks to a “you” with whom a universe of references is shared. But references shift and fluctuate according to our cultures, biases and learning. Just as a system’s knowledge must imperatively be updated, each of us routinely forgets, overwrites old opinions with new ones, erases them purely and simply, or preserves them by concatenation.

The human-machine relationship continually in question

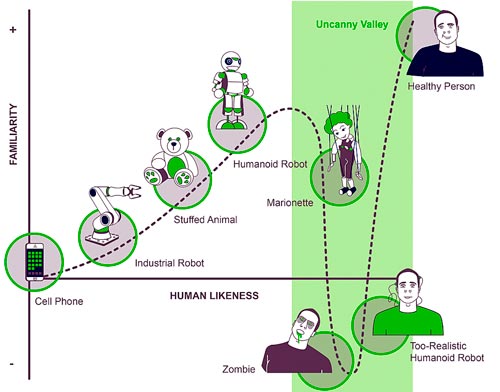

Even when the interlocutor is not oneself, otherness and distance are there. Now, the distance to the other, to the almost-self that is not quite the ego, is precisely what the Uncanny Valley diagram addresses. This “valley of the strange”, neatly drawn by the Japanese roboticist Masahiro Mori, shows to what extent the link to the human becomes unsettling as it grows more tenuous: a corpse or a zombie disturbs, and we fall to the bottom of the valley. But what about a yapping stuffed animal with gaudy pink fur? What about an articulated hand, at a time when exoskeletons allow wounded soldiers to walk again?

Similarly, the humanoid robot tends to be less frightening. It is now less unsettling as the gap widens: we increasingly understand that this servant remains confined to its circles of use and obedience.

Of course, worst-case scenarios predict the replacement of humans by these machines, but to what extent: entirely or only in part? Carrying a heavy load, perhaps; knowing how to play Tetris while optimizing maintenance, at a push; but conversing? Some will say that many bots pass the famous Turing test. To which I would reply: who is passing the test? The bot, through its ability to toy with me by leaving me in doubt about its possible humanity? Or me, through a lack of discernment owing to my fatigue, my enthusiasm or my more or less feigned credulity? Humans like to flirt with their fears, but they do not forget to be inventive and to exercise their discernment.

An impossible effort at banter?

Lately, the bot hype is said to be fading. After the hubbub of demos that looked more or less as if they had come out of a big-budget American film studio, we are back to a “belt and braces” tool, closer to an Interactive Voice Response (IVR) system, where everything is planned and orchestrated upstream in order to “do the job” or, on the contrary, to exacerbate the user’s irritation. Does this mean that the decision tree is outdated?

No. Obviously, this tree does not hide the forest, but it does tend to turn an English garden into a French formal garden. Its aim is to reduce the problem to a single question at a time, from which two answer paths are drawn, until only one class remains. Such trees can be hand-crafted through painstaking human effort, or built by an algorithm whose task is to maximize the separation of the classes at each node. This binary logic proves very efficient when operating in a circumscribed world where the themes are identifiable and identified, where the vocabulary is neither too metaphorical nor too polysemous, and where the goal is to render a pragmatic service.
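As an illustration of a tree built by an algorithm, the sketch below picks at each node the yes/no question that best separates the classes, and stops when a single class remains. The choice of Gini impurity as the splitting criterion is an assumption (the text does not name one), and the tiny dataset and keyword list are invented for the example.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 when only one class remains."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def split(examples, keyword):
    """Two answer paths for the question 'does the text contain keyword?'."""
    yes = [(t, l) for t, l in examples if keyword in t]
    no = [(t, l) for t, l in examples if keyword not in t]
    return yes, no


def best_question(examples, keywords):
    """Pick the keyword whose split leaves the lowest weighted impurity."""
    def weighted_impurity(keyword):
        yes, no = split(examples, keyword)
        if not yes or not no:
            return float("inf")  # this question separates nothing
        sizes = len(yes) * gini([l for _, l in yes]) + len(no) * gini([l for _, l in no])
        return sizes / len(examples)
    return min(keywords, key=weighted_impurity)


def build_tree(examples, keywords):
    """Return a class label (leaf) or a (keyword, yes_branch, no_branch) node."""
    labels = [l for _, l in examples]
    majority = Counter(labels).most_common(1)[0][0]
    if gini(labels) == 0.0 or not keywords:
        return majority
    keyword = best_question(examples, keywords)
    yes, no = split(examples, keyword)
    if not yes or not no:
        return majority
    rest = [k for k in keywords if k != keyword]
    return (keyword, build_tree(yes, rest), build_tree(no, rest))


def classify(tree, text):
    """Follow the yes/no answers down to a leaf."""
    while isinstance(tree, tuple):
        keyword, yes_branch, no_branch = tree
        tree = yes_branch if keyword in text else no_branch
    return tree


# Invented training utterances for a circumscribed "world" of three themes.
EXAMPLES = [
    ("i want to declare a claim", "claim"),
    ("my car claim after an accident", "claim"),
    ("what are your opening hours", "hours"),
    ("are you open on sunday", "hours"),
    ("where is the nearest exit", "directions"),
    ("how do i find the exit", "directions"),
]
KEYWORDS = ["claim", "open", "exit"]
TREE = build_tree(EXAMPLES, KEYWORDS)
```

With this data, `classify(TREE, "when do you open")` follows the “no” path at the first question and the “yes” path at the second. Note that an utterance matching no keyword still ends up in the last leaf, which shows why such trees work best when the themes and vocabulary are tightly bounded.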

On the other hand, if the requests we wish to satisfy require management of the dialogue history, complex interactions, a mix of linguistic usages from different communities of speakers or writers, the handling of proper nouns, dates, amounts or even a few humorous notes, it is preferable to use a more sophisticated approach based on concept detection (or slot filling). In this case, the aim is to find the concepts expressed in the statement and associate them with the way they are actually mentioned in each speaker’s own usage.
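A very reduced picture of slot filling is given below. It is a sketch under strong assumptions: real systems usually rely on trained sequence-labelling models, whereas this example uses hand-written regular expressions, and the slot names and patterns (`date`, `amount`, `name`) are hypothetical.

```python
import re

# Hypothetical slot patterns for an insurance-claim utterance.
SLOT_PATTERNS = {
    "date": re.compile(r"\b(\d{1,2}/\d{1,2}/\d{4})\b"),
    "amount": re.compile(r"(\d+(?:\.\d+)?)\s?(?:euros|EUR|€)"),
    "name": re.compile(r"\bmy name is ([A-Z][a-z]+(?: [A-Z][a-z]+)*)"),
}


def fill_slots(utterance: str) -> dict:
    """Return the concepts (slots) found in the utterance with their mentions."""
    slots = {}
    for slot, pattern in SLOT_PATTERNS.items():
        match = pattern.search(utterance)
        if match:
            slots[slot] = match.group(1)
    return slots


example = ("Hello, my name is Jane Doe, the accident happened "
           "on 12/03/2021 and repairs cost 450 euros")
slots = fill_slots(example)
```

Each detected concept keeps the wording the user actually used, so the same slot can later be matched against other ways of saying the same thing.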

Behind artificial intelligence, there is data

However, one key phrase is indispensable if these dialogue agents are to be truly cognitive: data collection. Indeed, the cognitive part is not locked inside the technological building blocks, however black-box they may be. It lies in the mapping done upstream, in the survey of language usage through word and n-gram counts and through lexical, acoustic and prosodic characteristics. What type of speakers are we dealing with (age, gender, accent)? What themes are addressed? What vocabulary is used in context? What are the notable habits and customs? In short, whether we are feeding an expert system or a Transformer-based architecture, separating the wheat from the chaff in the collected corpora is essential and remains the key to success.
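The word and n-gram counts mentioned above can be sketched in a few lines. The whitespace tokenization and the three-utterance corpus are simplifying assumptions made for the example; a real survey would use a proper tokenizer and a much larger collection.

```python
from collections import Counter


def ngram_counts(utterances, n):
    """Count every sequence of n consecutive tokens across the utterances."""
    counts = Counter()
    for utterance in utterances:
        tokens = utterance.lower().split()  # naive whitespace tokenization
        counts.update(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return counts


# Invented mini-corpus of user utterances.
corpus = [
    "I want to declare a claim",
    "I want to check my claim",
    "hello I want help",
]
unigrams = ngram_counts(corpus, 1)
bigrams = ngram_counts(corpus, 2)
```

Inspecting `bigrams.most_common()` gives a first map of how users actually phrase their requests, before any model is trained.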

Evaluation is then simplified, since misunderstandings will be limited to a few out-of-vocabulary words and/or a few mischievous users. The metrics will be clearer and the users’ perceptual experience better targeted. This is a winning combination because it removes the risk of excessive corpus bias. The same goes for small talk, those notes of humor that are real interactions within the interaction and should not be forgotten: identify the semantic traits and commonplaces associated with the company, the better to play with them (appetite for a bot centered on nutrition, strikes for one devoted to transportation…)!
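One simple metric in this spirit is the share of a user's words that fall outside the known vocabulary. The function below is an illustrative assumption, not a metric prescribed by the text, and the vocabulary is invented.

```python
def oov_rate(utterance, vocabulary):
    """Return (fraction of out-of-vocabulary tokens, list of those tokens)."""
    tokens = utterance.lower().split()
    if not tokens:
        return 0.0, []
    unknown = [t for t in tokens if t not in vocabulary]
    return len(unknown) / len(tokens), unknown


# Hypothetical vocabulary gathered from the collected corpus.
vocabulary = {"i", "want", "to", "declare", "a", "claim"}
rate, unknown = oov_rate("I want a kerfuffle", vocabulary)
```

Tracking this rate over time shows whether misunderstandings come from a handful of missing words or from a corpus that no longer matches its users.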

Vocal or not, that is the question?

Finally, the bot is a “tool” that is said to be vocal. What exactly is meant by this adjective, which refers to the voice and the physiology of the vocal cords (teeth, tongue, mouth opening and nose are also involved) as well as to the words we utter with more or less success?

When it takes the user’s voice signal (and the accompanying ambient noise) as input, a speech recognition component is de facto added. Unfortunately, and whatever some of the tech giants (GAFAM) may say, this is not always easy to set up, especially for spontaneous speech with its attendant false starts, repetitions, lexical wanderings and disfluencies (the “well”s and other “uhhhh”s whose length is sometimes disconcerting). Indeed, an Automatic Speech Recognition (ASR) system has to find, in real time, the words (and near-words) that were pronounced.

However, the effort is not in vain as long as the language, its targeted usages, accents and sounds are modeled. The language model characterizes, without any normative aim, the grammar of an era, a group of speakers, a region or a profession, or all of these at once. The acoustic model, for its part, is dedicated to a setting: the open air or the studio, the telephone or the car. It must be designed to be robust to the circumstances and to the sound of the surroundings.

Finally, the pronunciation (phonetization) dictionary plays out the variations that map spellings to their possible pronunciations, without mocking the user’s accent. Obviously, all of this can now be modeled in a single end-to-end neural system, without worrying technically about this three-way split. However, the linguistic and semantic knowledge will still be embedded in it! To avoid losing words along the way by drowning them in the mass of the most common ones, it is advisable to work on its training corpus beforehand.
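The phonetization dictionary can be pictured as a lexicon that maps each spelling to one or more pronunciation variants. The entries below are a hand-written illustration using ARPAbet-like symbols; they are assumptions for the example and are not taken from any particular lexicon.

```python
# Hypothetical lexicon: each word maps to its accepted pronunciation variants.
LEXICON = {
    "tomato": [
        ["T", "AH", "M", "EY", "T", "OW"],
        ["T", "AH", "M", "AA", "T", "OW"],
    ],
    "bot": [["B", "AA", "T"]],
}


def pronunciations(word):
    """Return every known pronunciation variant of a word (empty if unknown)."""
    return LEXICON.get(word.lower(), [])


def words_for(phones):
    """Reverse lookup: which words can this phone sequence correspond to?"""
    return sorted(w for w, variants in LEXICON.items() if list(phones) in variants)
```

Listing several variants per word is what lets the recognizer accept different accents as equally valid renderings of the same spelling.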

What about the vocalization of the bot’s response?

This first addition to the “robotic” processing chain can be coupled with a second one, but the latter is more debatable. For while transcribing what a user actually said remains essential on the telephone and in certain use cases (with children who cannot yet write or with non-native speakers, for example), offering speech synthesis at the end of the chain is not always the obvious choice. Whether for confidentiality reasons (who wants to have their secret code confirmed out loud?) or because the environment does not lend itself to it (who would dare to use a voicebot to check in at an airport?), vocalizing the answer is by no means a mandatory step.

All the more reason to take care of it and to listen carefully to this small or big voice. Tied to the bot’s persona but also to the company’s editorial line, it can make the exchange a success or push annoyance to its limit. However, it is “only” the last building block, and this inextricable link must be tested and evaluated. Perceptual tests with target users thus make it possible to verify the usefulness and pleasantness of the vocalization and to ensure spoken intelligibility without falling into the biases and easy smoothness of the GPS voice. In short, to avoid sa-bot-age, nothing beats a bot-anist’s eye on the data in real-world conditions.